This marks the first time a deep-learning model of its kind has successfully been used in a clinic on real patients, according to the researchers. With broad implementation, the researchers hope the model can help bring greater reliability to breast density assessments across the nation.

It’s estimated that more than 40 percent of U.S. women have dense breast tissue, which alone increases the risk of breast cancer. Moreover, dense tissue can mask cancers on the mammogram, making screening more difficult. As a result, 30 U.S. states mandate that women must be notified if their mammograms indicate they have dense breasts.

But breast density assessments rely on subjective human judgment. Due to many factors, results vary, sometimes dramatically, across radiologists. The MIT and MGH researchers trained a deep-learning model on tens of thousands of high-quality digital mammograms to learn to distinguish different types of breast tissue, from fatty to extremely dense, based on expert assessments. Given a new mammogram, the model can then produce a density rating that closely aligns with expert opinion.

“Breast density is an independent risk factor that drives how we communicate with women about their cancer risk. Our motivation was to create an accurate and consistent tool that can be shared and used across health care systems,” says Adam Yala, a PhD student in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and second author on a paper describing the model that was published today in Radiology.

The other co-authors are first author Constance Lehman, professor of radiology at Harvard Medical School and the director of breast imaging at the MGH; and senior author Regina Barzilay, the Delta Electronics Professor at CSAIL and the Department of Electrical Engineering and Computer Science at MIT and a member of the Koch Institute for Integrative Cancer Research at MIT.

Mapping density

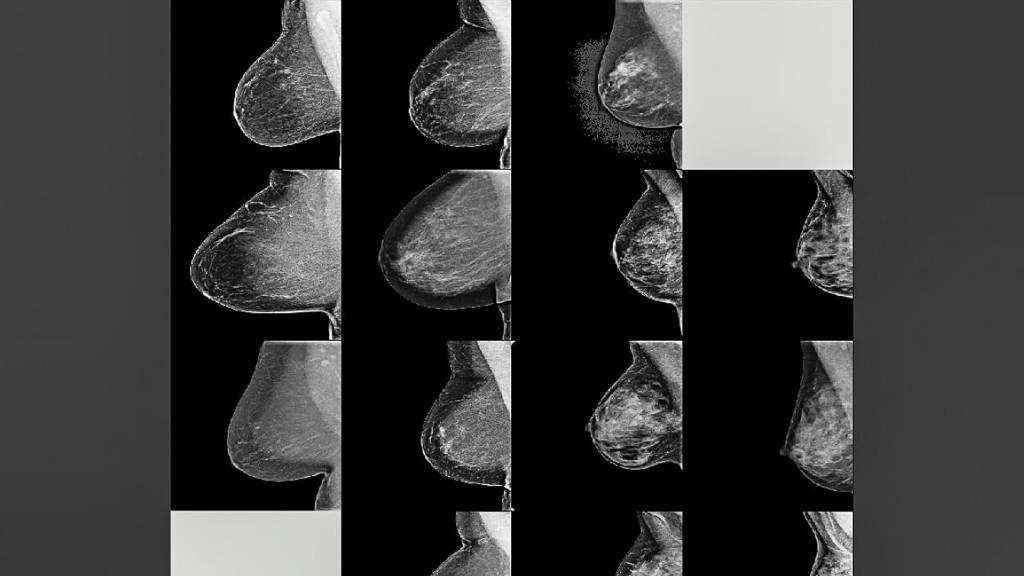

The model is built on a convolutional neural network (CNN), an architecture widely used for computer vision tasks. The researchers trained and tested their model on a dataset of more than 58,000 randomly selected mammograms from more than 39,000 women screened between 2009 and 2011. They used around 41,000 mammograms for training and about 8,600 for testing.

Each mammogram in the dataset has a standard Breast Imaging Reporting and Data System (BI-RADS) breast density rating in one of four categories: fatty, scattered (scattered density), heterogeneous (mostly dense), and dense. In both the training and testing sets, about 40 percent of mammograms were assessed as heterogeneous or dense.

The automated model makes better predictions than traditional models.

During the training process, the model is given random mammograms to analyze. It learns to map each mammogram to the density rating assigned by expert radiologists. Dense breasts, for instance, contain glandular and fibrous connective tissue, which appear as compact networks of thick white lines and solid white patches. Fatty tissue networks appear much thinner, with gray areas throughout. In testing, the model observes new mammograms and predicts the most likely density category.
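
To make the final prediction step concrete, here is a minimal sketch of how a classifier's raw output could be turned into the "most likely" density category. It is not the researchers' code: the function names, the softmax step, and the example scores are all assumptions chosen for illustration, and the scores simply stand in for whatever a trained CNN would produce for one mammogram.

```python
import math

# BI-RADS density categories as named in the article, ordered from least to most dense.
CATEGORIES = ["fatty", "scattered", "heterogeneous", "dense"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_density(scores):
    """Return (category, probability) for the highest-scoring category."""
    if len(scores) != len(CATEGORIES):
        raise ValueError("expected one score per density category")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CATEGORIES[best], probs[best]


def is_dense(category):
    """Group the two densest categories, as the article does for its 40 percent figure."""
    return category in ("heterogeneous", "dense")


# Hypothetical scores standing in for a trained network's final-layer output.
example_scores = [0.2, 1.1, 2.7, 0.9]
category, prob = predict_density(example_scores)
print(category, round(prob, 3), is_dense(category))
```

In this sketch the third score is the largest, so the mammogram would be labeled heterogeneous and counted as dense under the two-way grouping.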