A broader and more competitive ecosystem spurred by smartphone and tablet sensor integration, forecast to reach close to $5 billion in 2016, will create massive opportunities in automotive, consumer electronics, and healthcare. Healthcare, in particular, offers the largest untapped opportunity for eye tracking and gesture applications in patient care.

“Gesture and eye tracking sensors will transform the way people interact with machines, systems, and their environment,” says Jeff Orr, Research Director for ABI Research. This will happen in much the same way that touchscreens eclipsed the PC mouse. “Healthcare professionals are relying on these sensors to move away from subjective patient observations and toward more quantifiable and measurable prognoses, revolutionizing patient care.”

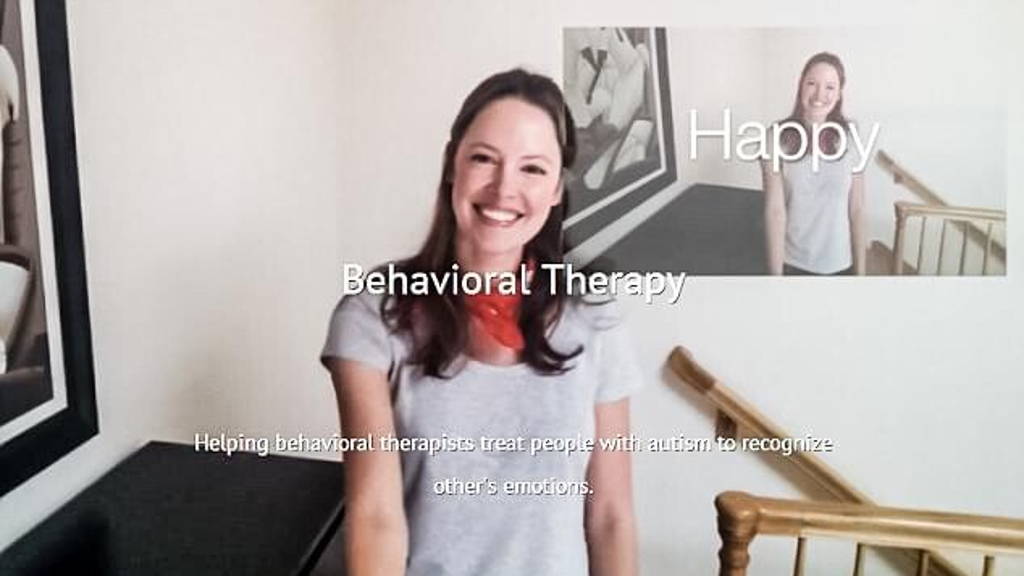

ABI believes eye tracking sensors (for example, in glass wearables) can help detect concussions and head trauma, identify autism in children before they begin speaking (as in the Autism Glass experiment), and enable vision therapy programs that retrain the learned aspects of vision in children with early learning challenges.

Similarly, gesture sensors are translating sign language into speech, providing doctors a means to manipulate imaging hands-free during surgical procedures, and providing a natural means to navigate through virtual experiences.

Human-machine interface revolution

Both established and startup companies are involved in the human-machine interface revolution. Sensor innovation is coming from Hillcrest Labs, NXP, and Synaptics, among others. Atheer, Bluemint Labs, eyeSight, Google, Intel, Leap Motion, Microsoft, Nod Labs, RightEye, and Tobii Group have also recently announced creative gesture, proximity, and eye tracking solutions.
