Last year, the Royal Free London NHS Foundation Trust and artificial intelligence firm DeepMind extended an already controversial partnership by teaming up for the next five years to develop a clinical app called Streams. New Scientist published an article earlier in 2016 that raised concerns that the partnership had given DeepMind access to “a wide range of healthcare data on the 1.6 million patients … from the last five years.” Privacy firms also share the concern that medical records are being collected on a massive scale without the explicit consent of patients.

Earlier in 2016 DeepMind started a campaign to regain the public’s trust.

Shortcomings in handling of patient data

According to the ICO ruling, the Trust provided personal data of around 1.6 million patients as part of a trial to test an alert, diagnosis and detection system for acute kidney injury. An ICO investigation found several shortcomings in how the data was handled, including that patients were not adequately informed that their data would be used as part of the test.

The ICO concluded not enough was done to inform patients that their information was being processed by DeepMind during the testing phase of the app.

There was also a lack of transparency about how patient information would be used to test the new app. Therefore patients could not exercise their statutory right to object to the processing of their information.

Changes to be made

The Trust has been asked to commit to changes ensuring it is acting in line with the law by signing an undertaking. Elizabeth Denham, Information Commissioner, says there’s no doubt about the huge potential that creative use of data could have on patient care and clinical improvements. But the price of innovation does not need to be the erosion of fundamental privacy rights.

“Our investigation found a number of shortcomings in the way patient records were shared for this trial. Patients would not have reasonably expected their information to have been used in this way, and the Trust could and should have been far more transparent with patients as to what was happening.”

Following the ICO investigation, the Trust has been asked to:

- Establish a proper legal basis under the Data Protection Act for the Google DeepMind project and for any future trials;

- Set out how it will comply with its duty of confidence to patients in any future trial involving personal data;

- Complete a privacy impact assessment, including specific steps to ensure transparency;

- Commission an audit of the trial, the results of which will be shared with the Information Commissioner, and which the Commissioner will have the right to publish as she sees appropriate.

The Information Commissioner has also published a blog post looking at what other NHS Trusts can learn from this case. Among the lessons are that the reported shortcomings in the handling of patient data were avoidable, and that a privacy impact assessment should be an integral part of any innovation where patient data is concerned.

Trust can continue using Streams app

The Royal Free London NHS Foundation Trust has responded that it passionately believes in the power of technology to improve care for patients. This has always been the driving force for its Streams app.

‘We are pleased that the information commissioner supports this approach and has allowed us to continue using the app which is helping us to get the fastest treatment to our most vulnerable patients – potentially saving lives.’

The Trust has co-operated fully with the ICO’s investigation, which began in May 2016. ‘It is helpful to receive some guidance on the issue about how patient information can be processed to test new technology. We also welcome the decision of the Department of Health to publish updated guidance for the wider NHS in the near future.’

The Trust has accepted all of the ICO’s findings and has made good progress in addressing the areas where the ICO has concerns. ‘For example, we are now doing much more to keep our patients informed about how their data is used. We would like to reassure patients that their information has been in our control at all times and has never been used for anything other than delivering patient care or ensuring their safety.’

The Trust says it is committed to the partnership with DeepMind, which it entered into in November 2016 and which incorporated much of the learning from the early stages of the project. ‘We are determined to get this right to ensure that the NHS has the opportunity to benefit from the technology we all use in our everyday lives. We must embrace the opportunities which come from working with a world-leading company such as DeepMind to ensure the NHS does not get left behind.’

What the investigation was about

The ICO has been looking at the way patient data was used to test Streams for safety. This process was first governed by a partnership agreement signed between DeepMind and the Royal Free NHS Foundation Trust in September 2015, which has since been superseded by an agreement signed in November 2016.

The ICO has not been investigating the live clinical use of Streams, which is being carried out under the existing agreement between the Royal Free London and DeepMind and is delivering improved outcomes for patients with acute kidney injury.

The focus of the investigation has been on the Royal Free London as the data controller. The ICO raised concerns about whether the Trust could have done more to inform patients that their information was being processed to test the safety of the Streams app, and about the amount of information that was processed.

"We take the findings of the ICO seriously and have signed up to deliver all of the undertakings – including delivering a third privacy impact assessment of our work with DeepMind, continuing to be open and transparent about how we use patient information and conducting a third party audit of our current processing arrangements with DeepMind."

Instant alert app

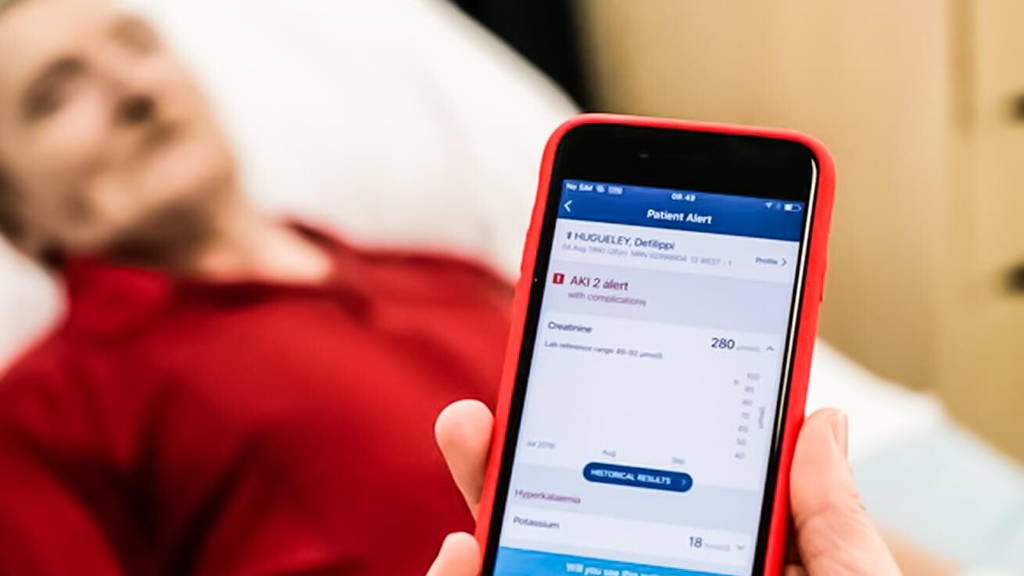

Streams is a secure instant alert app which delivers improved care for patients by getting the right data to the right clinician at the right time. Similar to a breaking news alert on a mobile phone, the technology notifies nurses and doctors immediately when test results show a patient is at risk of becoming seriously ill, and provides all the information they need to take action.

Each year, many thousands of people in UK hospitals die from conditions like sepsis and acute kidney injury, which could be prevented, simply because warning signs aren't picked up and acted on in time. Streams integrates different types of data and test results from a range of existing IT systems used by the hospital.

Because patient information is contained in one place – on a mobile application – it reduces the administrative burden on staff and means they can dedicate more time to delivering direct patient care. It is currently being used by clinicians at the Royal Free London to help identify patients at risk of acute kidney injury. The app has been through a rigorous user testing process and has been registered with the Medicines and Healthcare products Regulatory Agency (MHRA) as a medical device.

DeepMind draws its own conclusions

DeepMind released its own statement on the ICO’s conclusions, welcoming the ICO’s thoughtful resolution of this case, which DeepMind hopes will guarantee the ongoing safe and legal handling of patient data for Streams.

The ICO’s undertaking also recognised that the Royal Free has stayed in control of all patient data, with DeepMind confined to the role of “data processor” and acting on the Trust’s instructions throughout. No issues have been raised about the safety or security of the data.

Although the findings are about the Royal Free, DeepMind believes it needs to reflect on its own actions too. 'In our determination to achieve quick impact when this work started in 2015, we underestimated the complexity of the NHS and of the rules around patient data, as well as the potential fears about a well-known tech company working in health. We were almost exclusively focused on building tools that nurses and doctors wanted, and thought of our work as technology for clinicians rather than something that needed to be accountable to and shaped by patients, the public and the NHS as a whole. We got that wrong, and we need to do better.'

Improvements regarding DeepMind transparency

Since then, DeepMind has worked on some major improvements to its transparency, oversight and engagement. The initial legal agreement with the Royal Free in 2015 could have been much more detailed about the specific project underway, as well as the rules they had agreed to follow in handling patient information. The agreement was replaced in 2016 with a far more comprehensive contract, and DeepMind has signed similarly strong agreements with other NHS Trusts using Streams.

It was a mistake to not publish the work when it first began in 2015, so DeepMind has proactively announced and published the contracts for subsequent NHS partnerships.

In the initial rush to collaborate with nurses and doctors to create products that addressed clinical need, not enough was done to make patients and the public aware of the work or invite them to challenge and shape priorities. "Since then we have worked with patient experts, devised a patient and public engagement strategy, and held our first big open event in September 2016 with many more to come."
